How to decrease disk usage or find interesting things while investigating a filesystem

Hi, awesome community!

I hope you are doing well.

In this article, I'd like to share how I use a small utility called fdupes.

The project's home page is on GitHub.

Let's define the use case:

1. We have a huge directory, {jira_home}/data/attachments/ or {confluence_home}/attachments. (For Bamboo and Bitbucket this will not work properly.)

In my case, these are ~750 GB and ~180 GB.

All of these instances are on-premises.

We need to analyze the existing disk usage for duplicates and check whether the duplicates can be replaced by symlinks.
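To make the goal concrete, here is a minimal sketch of what "analyzing for duplicates" means: files with identical content are grouped by checksum. This is not how fdupes is implemented, only an illustration. The function name `list_duplicate_groups` is hypothetical, and the sketch assumes GNU coreutils (`sha256sum`, `uniq -w`) and ordinary file names without newlines or backslashes.

```shell
#!/usr/bin/env bash
# Sketch only: print groups of files under a directory that share a checksum.
# Assumes GNU coreutils; "list_duplicate_groups" is a made-up helper name.
list_duplicate_groups() {
    local dir="$1"
    # 1. Hash every regular file (output: "<64 hex chars>  <path>").
    # 2. Sort so that equal hashes end up on adjacent lines.
    # 3. Keep only lines whose first 64 characters repeat,
    #    with a blank line between groups.
    find "$dir" -type f -exec sha256sum {} + \
        | sort \
        | uniq -w 64 --all-repeated=separate
}
```

Try it on a small test directory first, for example `list_duplicate_groups ./some-test-dir`. On hundreds of gigabytes, hashing every file is slow, which is one reason to use a dedicated tool such as fdupes for the real analysis.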

So, without any more stalling, here we go.

1. Install fdupes if it is not already on your system.

On RHEL/CentOS-based and Fedora-based systems:

yum install fdupes

dnf install fdupes [On Fedora 22 onwards]

On Debian-based systems:

sudo apt-get install fdupes

or

sudo aptitude install fdupes

On macOS:

brew install fdupes

2. Next step is to change to an attachments directory

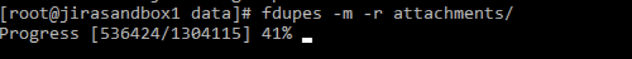

fdupes --recurse --size --summarize ./attachments/3. You can see progress like this:

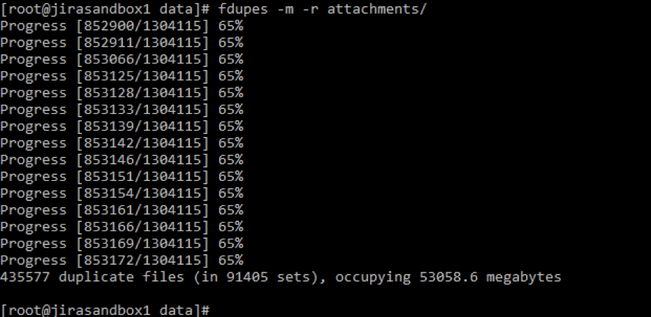

4. And finally, I got the result.

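5. Only after the analysis do I suggest replacing duplicates with hard links (--hardlinks). Check the man page of your fdupes version first, because the link-related options differ between versions, and I strongly recommend trying it on test files before doing it in production. Sometimes it is better to do this per Jira project key directory.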
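For the "try it on test files first" part of step 5, a small sketch like the following can help you see what replacing a duplicate with a hard link actually does. It does not use fdupes at all. The helper name `link_if_identical` is hypothetical, and the sketch assumes both files live on the same filesystem, since hard links cannot cross filesystems.

```shell
#!/usr/bin/env bash
# Sketch only: replace DUP with a hard link to KEEP, but only when the
# two files are byte-for-byte identical. "link_if_identical" is a
# made-up helper name, not part of fdupes.
link_if_identical() {
    local keep="$1" dup="$2" tmp
    # Already the same inode: nothing to do.
    if [ "$keep" -ef "$dup" ]; then
        return 0
    fi
    # cmp -s exits 0 only when the contents are identical.
    if ! cmp -s -- "$keep" "$dup"; then
        echo "skip (contents differ): $dup" >&2
        return 1
    fi
    # Create the link under a temporary name, then rename it over DUP,
    # so DUP stays untouched if ln fails (e.g. different filesystem).
    tmp="$dup.linktmp.$$"
    ln -- "$keep" "$tmp" || return 1
    mv -f -- "$tmp" "$dup"
}
```

After running `link_if_identical keep.bin dup.bin` on two test files, `ls -li` should show the same inode number for both. Keep in mind that hard-linked files share their content, so anything that modifies one of them in place changes the other as well.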

Conclusion:

During this analysis, I found that apps such as Exocet and Clone Plus, custom post-functions that clone issues, and mail handler problems generate most of the duplicates. And of course, the cause can also be a workflow bottleneck, duplicated issues, or incorrectly parsed emails.

And please, don't be fanatical about it, because disk space is one of the cheapest things nowadays. (I hope) ;)

Also, I suggest reading: Hierarchical File System Attachment Storage and Jira attachments structure.

I will be happy if community members post their own statistics here.

Maybe disk usage deduplication will be implemented one day ;)

P.S. For Windows, please have a look at the jdupes utility.

P.P.S. If you want to do this for all files, please have a look at kvdo, because it works at a lower level. I hope this article will be interesting for you (VDO: new Linux compression layer).

P.P.P.S. If you are using NetApp ;), it is out-of-the-box functionality.

Cheers,

Gonchik Tsymzhitov
